Maximizing direct methanol fuel cell performance: Reinforcement learning enables real-time voltage control

Fuel cells convert the chemical energy in fuels into electricity through electrochemical reactions rather than combustion. One promising type is the direct methanol fuel cell (DMFC), a device designed to convert the energy in methyl alcohol (i.e., methanol) into electrical energy.

Despite their potential for powering large electronics, vehicles and other systems requiring portable power, these methanol-based fuel cells still have significant limitations. Most notably, studies have found that their performance tends to degrade significantly over time, because the materials used to catalyze reactions in the cells (i.e., electrocatalytic surfaces) gradually become less effective.

One way to clean these surfaces and prevent the build-up of poisoning products generated during the reactions is to modulate the voltage applied to the fuel cell. However, manually adjusting this voltage effectively, while also accounting for the physical and chemical processes inside the cell, is impractical for real-world applications.

Researchers at the Massachusetts Institute of Technology (MIT) recently developed Alpha-Fuel-Cell, a new machine learning-based tool that can monitor the state of a catalyst and adjust the voltage applied to it accordingly. The new computational tool, outlined in a paper published in Nature Energy, was found to improve the average power produced by direct methanol fuel cells by 153% compared to conventional manual voltage operation strategies.

“Fuel cells slowly lose power as they run, and it’s hard for humans to keep adjusting the controls to get the most out of them,” Ju Li, senior author of the paper, told Tech Xplore. “We asked a simple question: could an AI system watch the fuel cell in real time and keep it operating at its optimal spot, the way cruise control keeps your car at a steady speed?”

The primary objective of this recent study by Li and his colleagues was to assess the potential of artificial intelligence (AI)-based models for improving the performance of methanol fuel cells. Specifically, they wished to demonstrate that machine learning techniques can help optimize the voltage required to clean electrocatalytic surfaces, performing well not only in simulations, but also on real systems.

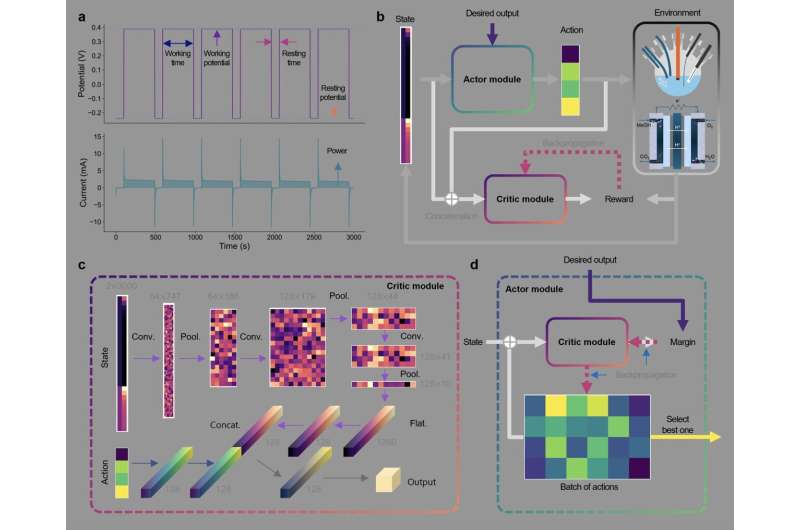

“Simply, the Alpha-Fuel-Cell is composed of an actor, which controls the system by analyzing the fuel cell’s condition over the past running time, and a critic, which evaluates the value of actions based on the fuel cell’s state,” explained Li. “In general, the actor-critic algorithm commonly used in reinforcement learning employs separate neural networks for the actor and the critic.”

While artificial neural networks (ANNs) have been found to reliably tackle several real-world tasks, they generally require large amounts of domain-specific training data. To implement their machine learning-based framework more efficiently, the researchers adopted an actor-critic architecture, which consists of two components (an actor and a critic) that learn through trial and error.

“The critic is composed of two branches: a state branch for analyzing the fuel cell condition and an action branch for recognizing the actions,” said Li. “The state branch uses a convolutional neural network (CNN), a neural network structure widely used in computer vision, known for its computational efficiency. This allows us to directly use the raw trajectories of current and voltage as input.”

The critic algorithm’s so-called “action branch” relies on a standard feedforward neural network. This is a widely used artificial neural network made up of layers of interconnected nodes, with data flowing in a single direction through them.
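To make the two-branch structure described above more concrete, here is a minimal, untrained sketch in plain Python. It is not the researchers' implementation: the layer sizes, kernel width, random weights, the pooling step, the way the two branches are merged, and names such as `TwoBranchCritic` are all assumptions chosen for illustration.

```python
import random

def relu(x):
    return x if x > 0.0 else 0.0

def conv1d(channels, kernels, biases):
    """Valid 1D convolution with ReLU.

    channels: [C_in][T] input series; kernels: [C_out][C_in][K] weights.
    """
    length = len(channels[0])
    width = len(kernels[0][0])
    out = []
    for kernel, bias in zip(kernels, biases):
        row = []
        for t in range(length - width + 1):
            total = bias
            for c, taps in enumerate(kernel):
                for k, w in enumerate(taps):
                    total += w * channels[c][t + k]
            row.append(relu(total))
        out.append(row)
    return out

def dense(inputs, weights, biases, activate=True):
    """Fully connected layer; weights is [n_out][n_in]."""
    outputs = []
    for row, bias in zip(weights, biases):
        total = bias + sum(w * x for w, x in zip(row, inputs))
        outputs.append(relu(total) if activate else total)
    return outputs

class TwoBranchCritic:
    """Hypothetical critic: CNN state branch plus feedforward action branch."""

    def __init__(self, n_filters=4, kernel_width=5, n_hidden=8, seed=0):
        rng = random.Random(seed)

        def rand(*shape):
            # Nested lists of small random weights with the given shape.
            if len(shape) == 1:
                return [rng.uniform(-0.5, 0.5) for _ in range(shape[0])]
            return [rand(*shape[1:]) for _ in range(shape[0])]

        # State branch: two input channels (current and voltage histories).
        self.kernels = rand(n_filters, 2, kernel_width)
        self.conv_bias = rand(n_filters)
        # Action branch: one scalar action (a proposed voltage).
        self.act_w = rand(n_hidden, 1)
        self.act_b = rand(n_hidden)
        # Head: merged features -> a single predicted value.
        self.head_w = rand(1, n_filters + n_hidden)
        self.head_b = rand(1)

    def value(self, current, voltage, action):
        feature_maps = conv1d([current, voltage], self.kernels, self.conv_bias)
        # Global average pooling reduces each feature map to one number.
        state_features = [sum(m) / len(m) for m in feature_maps]
        action_features = dense([action], self.act_w, self.act_b)
        merged = state_features + action_features
        return dense(merged, self.head_w, self.head_b, activate=False)[0]

# Made-up 60-sample history of raw current and voltage readings.
current_history = [0.30 - 0.002 * t for t in range(60)]
voltage_history = [0.45] * 60
critic = TwoBranchCritic()
predicted_value = critic.value(current_history, voltage_history, action=0.40)
```

Because the weights are random, `predicted_value` carries no physical meaning here; the point is only the data flow, in which raw trajectories enter the convolutional branch, the candidate action enters the feedforward branch, and the two feature vectors are combined into one score.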

“This model is trained to predict future outputs based on past states and current inputs,” explained Li. “On the other hand, the actor leverages the learned knowledge by incorporating the critic model within itself. Since neural networks are differentiable, it is possible to numerically calculate the current input needed to achieve the desired output. If a high output value is simply set as the goal, the model will attempt to maximize it.”
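The idea that a differentiable critic lets the actor compute which input leads to a higher predicted output can be sketched as gradient ascent on the action while the critic's weights stay fixed. The example below is a toy under stated assumptions: the critic is a tiny hand-written network with arbitrary weights, the state is reduced to a single number, and the voltage bounds, step size and the name `choose_voltage` are hypothetical. The article does not describe the optimizer the researchers actually use.

```python
import math

# Stand-in critic with one tanh hidden layer:
#   value = sum_j V_OUT[j] * tanh(W_STATE[j]*state + W_ACTION[j]*action + BIAS[j])
# The weights are arbitrary illustration values, not a trained model.
W_STATE = [0.8, -0.5, 0.3]
W_ACTION = [1.5, -2.0, 0.7]
BIAS = [-0.6, 0.9, 0.1]
V_OUT = [1.0, 0.8, -0.4]

def critic_value(state, action):
    return sum(v * math.tanh(ws * state + wa * action + b)
               for v, ws, wa, b in zip(V_OUT, W_STATE, W_ACTION, BIAS))

def critic_grad_action(state, action):
    """Exact derivative of critic_value with respect to the action."""
    grad = 0.0
    for v, ws, wa, b in zip(V_OUT, W_STATE, W_ACTION, BIAS):
        h = math.tanh(ws * state + wa * action + b)
        grad += v * (1.0 - h * h) * wa  # chain rule through tanh
    return grad

def choose_voltage(state, start, low=0.0, high=1.0, step=0.05, iters=200):
    """Projected gradient ascent on the action; returns the best point seen."""
    action = start
    best_action, best_value = action, critic_value(state, action)
    for _ in range(iters):
        action += step * critic_grad_action(state, action)
        action = min(high, max(low, action))  # stay inside the allowed range
        value = critic_value(state, action)
        if value > best_value:
            best_action, best_value = action, value
    return best_action, best_value

state = 0.2          # hypothetical scalar summary of the cell's condition
start_voltage = 0.5  # hypothetical starting guess
voltage, value = choose_voltage(state, start_voltage)
```

In a real controller the state would be the recent current and voltage trajectory rather than one number, and the gradient would typically come from an automatic differentiation library instead of a hand-derived formula.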

The actor-critic architecture employed by Li and his colleagues allowed them to assess the state of catalysts and modulate the applied voltage without the need for extensive training data. Ultimately, they achieved promising results using a relatively small dataset of approximately 1,000 voltage-time trajectories, which took just two weeks to collect on a real-world experimental setup.
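One plausible way to turn logged trajectories like these into training examples for a model that predicts future output from past states and the current input is to slide a window along each run. The sketch below is an assumption about how that could look, not the researchers' pipeline: the window and horizon lengths, the use of mean power (voltage times current) as the target, the sample log and the name `make_samples` are all hypothetical.

```python
def make_samples(voltage, current, window=5, horizon=3):
    """Slice one logged run into (past_state, action, target_power) samples.

    past_state:   the last `window` (voltage, current) readings
    action:       the voltage applied at the next time step
    target_power: mean electrical power (V * I) over the next `horizon` steps
    """
    if len(voltage) != len(current):
        raise ValueError("voltage and current logs must have equal length")
    samples = []
    for t in range(window, len(voltage) - horizon + 1):
        past_state = list(zip(voltage[t - window:t], current[t - window:t]))
        action = voltage[t]
        future = range(t, t + horizon)
        target_power = sum(voltage[k] * current[k] for k in future) / horizon
        samples.append((past_state, action, target_power))
    return samples

# Made-up ten-step log, for illustration only.
voltage_log = [0.40, 0.40, 0.42, 0.42, 0.45, 0.45, 0.30, 0.30, 0.45, 0.45]
current_log = [0.20, 0.19, 0.18, 0.18, 0.16, 0.15, 0.25, 0.24, 0.18, 0.17]
samples = make_samples(voltage_log, current_log)
```

Each tuple pairs what the controller could observe with what it chose and what followed, which is the form a critic-style regression model needs.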

“Our controller is a real-time, goal-adaptive architecture that learns directly from experimental data, and no simulator in the loop,” said Li.

“Since implementing a high-quality simulator is difficult, this is a significant advantage. This system is the first demonstration of a combination of AI and energy devices, maintaining maximum fuel cell power with automatic catalyst self-healing. The system figures out when short rests actually help the cell to recover, instead of wasting time. Any clean-energy devices (fuel cells, batteries, CO₂ electrolysis) drift and age.”

The new approach devised by this team of researchers could soon be refined further and tested in a broader range of experiments and real-world scenarios. In the future, it could help to improve the performance of direct methanol fuel cells, extending their lifetimes without requiring expensive equipment.

“We’re now scaling our approach from a single lab cell to larger, real-world stacks, adding safety and lifetime limits directly into the controller, and testing the same idea on batteries and other electrochemical systems to generalize it,” added Li.

Written by Ingrid Fadelli, edited by Gaby Clark, and fact-checked and reviewed by Robert Egan.

More information:

Hongbin Xu et al, An actor–critic algorithm to maximize the power delivered from direct methanol fuel cells, Nature Energy (2025). DOI: 10.1038/s41560-025-01804-x.

© 2025 Science X Network

Citation: Maximizing direct methanol fuel cell performance: Reinforcement learning enables real-time voltage control (2025, August 7), retrieved 7 August 2025 from https://techxplore.com/news/2025-08-maximizing-methanol-fuel-cell-enables.html
