Lifelong learning will power next generation of autonomous devices

Look up “lifelong learning” online, and you’ll find a laundry list of apps to teach you how to quilt, play chess or even speak a new language. Within the emerging fields of artificial intelligence (AI) and autonomous devices, however, “lifelong learning” means something different—and it is a bit more complex. It refers to the ability of a device to continuously operate, interact with and learn from its environment—on its own and in real time.

This ability is critical to the development of some of our most promising technologies—from automated delivery drones and self-driving cars, to extraplanetary rovers and robots capable of doing work too dangerous for humans.

In all these instances, scientists are developing algorithms at a breakneck pace to enable such learning. But the specialized hardware that devices need to run these new algorithms, known as AI accelerators or chips, must keep up.

That’s the challenge that Angel Yanguas-Gil, a researcher at the U.S. Department of Energy’s (DOE) Argonne National Laboratory, has taken up. His work is part of Argonne’s Microelectronics Initiative. Yanguas-Gil and a multidisciplinary team of colleagues recently published a paper in Nature Electronics that explores the programming and hardware challenges that AI-driven devices face, and how we might be able to overcome them through design.

Learning in real time

Current approaches to AI are based on a training and inference model. The developer “trains” the AI capability offline to use only certain types of information to perform a defined set of tasks, tests its performance and then installs it onto the destination device.

“At that point, the device can no longer learn from new data or experiences,” explains Yanguas-Gil. “If the developer wants to add capabilities to the device or improve its performance, he or she must take the device out of service and train the system from scratch.”

For complex applications, this model simply isn’t feasible.

“Think of a planetary rover that encounters an object that it wasn’t trained to recognize. Or it enters terrain it was not trained to navigate,” Yanguas-Gil continues.

“Given the time lag between the rover and its operators, shutting it down and trying to retrain it to perform in this situation won’t work. Instead, the rover must be able to collect the new types of data. It must relate that new information to information it already has—and the tasks associated with it. And then make decisions about what to do next in real time.”

The challenge is that real-time learning requires significantly more complex algorithms. In turn, these algorithms require more energy, more memory and more flexibility from the hardware accelerators that run them. Yet these chips are almost always strictly limited in size, weight and power, with the exact limits depending on the device.

Keys for lifelong learning accelerators

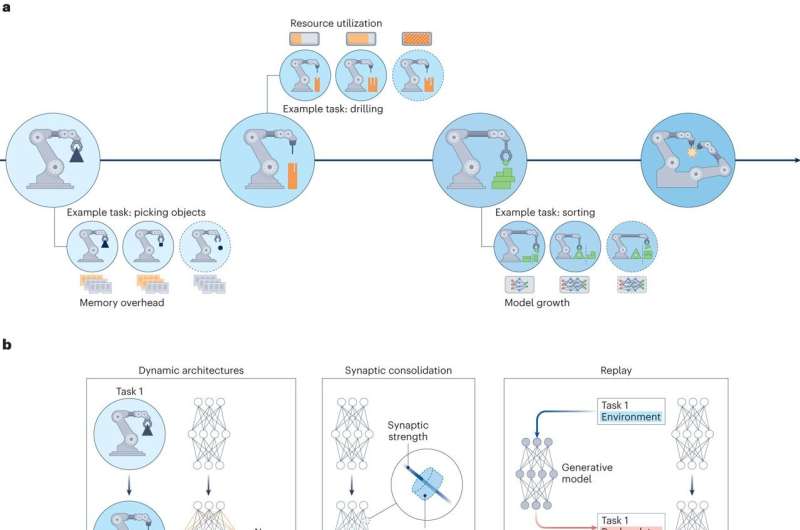

According to the paper, AI accelerators need a number of capabilities to enable their host devices to learn continuously.

The learning capability must be located on the device. In most intended applications, there won’t be time for the device to retrieve information from a remote source like the cloud or to prompt a transmission from the operator with instructions before it needs to perform a task.

The accelerator must also be able to change how it uses its resources over time to make the most efficient use of energy and space. This could mean changing where it stores certain types of data, or how much energy it uses to perform certain tasks.

Another necessity is what researchers call “model recoverability.” This means that the system can retain enough of its original structure to keep performing its intended tasks at a high level, even though it is constantly changing and evolving as a result of its learning. The system should also prevent what experts refer to as “catastrophic forgetting,” where learning new tasks causes the system to forget older ones. This is a common occurrence in current machine learning approaches. If necessary, systems should be able to revert to more successful practices if performance begins to suffer.
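To make the idea of reverting concrete, here is a minimal sketch in Python. It is not taken from the paper: the class name `RecoverableModel`, the `tolerance` parameter and the assumption that a single score measures performance on the device's original tasks are all hypothetical, chosen only for illustration.

```python
import copy


class RecoverableModel:
    """Toy model wrapper that can fall back to its best known parameters.

    Hypothetical illustration: `params` stands in for whatever the
    accelerator stores, and `score` is assumed to measure performance
    on the device's original tasks (higher is better).
    """

    def __init__(self, params, tolerance=0.05):
        self.params = dict(params)
        self.tolerance = tolerance  # allowed drop before reverting (assumed)
        self.best_params = copy.deepcopy(self.params)
        self.best_score = None

    def commit_or_revert(self, candidate_params, score):
        """Accept an update unless it degrades the score beyond tolerance."""
        degraded = (
            self.best_score is not None
            and score < self.best_score - self.tolerance
        )
        if degraded:
            # Performance suffered: restore the last known-good snapshot.
            self.params = copy.deepcopy(self.best_params)
            return False
        self.params = dict(candidate_params)
        if self.best_score is None or score > self.best_score:
            # New best: remember it as the snapshot to recover to.
            self.best_score = score
            self.best_params = copy.deepcopy(self.params)
        return True


model = RecoverableModel({"w": 1.0})
kept = model.commit_or_revert({"w": 1.2}, score=0.90)      # accepted
reverted = model.commit_or_revert({"w": 3.0}, score=0.60)  # rejected
```

In this sketch the second update is rejected because its score falls well below the best one seen so far, so the model keeps the parameters from the first update. A real accelerator would have to do the same bookkeeping within tight memory and power limits.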

Finally, the accelerator may need to consolidate knowledge gained from previous tasks, using data from past experiences through a process known as replay, while it is actively completing new ones.
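Replay can be pictured as a small memory of past examples that is mixed into each new batch of training data. The Python sketch below is one common way to build such a memory, assuming a fixed-size buffer filled by reservoir sampling. The names `ReplayBuffer` and `mixed_batch` are hypothetical, and the paper does not prescribe this particular design.

```python
import random


class ReplayBuffer:
    """Fixed-size memory of past examples, filled by reservoir sampling.

    Hypothetical illustration: every example seen so far has an equal
    chance of being in the buffer, however long the device has run.
    """

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.items = []
        self.seen = 0  # total number of examples offered to the buffer
        self.rng = random.Random(seed)

    def add(self, example):
        """Store an example, possibly replacing a randomly chosen old one."""
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(example)
            return
        slot = self.rng.randrange(self.seen)
        if slot < self.capacity:
            self.items[slot] = example

    def mixed_batch(self, new_examples, n_replay):
        """Return the new examples followed by up to n_replay stored ones."""
        k = min(n_replay, len(self.items))
        return list(new_examples) + self.rng.sample(self.items, k)


buffer = ReplayBuffer(capacity=4)
for i in range(10):
    buffer.add(("task_a", i))  # experiences from an earlier task

# While learning task_b, rehearse two remembered task_a examples.
batch = buffer.mixed_batch([("task_b", 0), ("task_b", 1)], n_replay=2)
```

Keeping the buffer at a fixed size reflects the constraint described earlier: the device cannot store everything it has seen, so it keeps a representative sample and rehearses from that.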

All these capabilities present challenges for AI accelerators that researchers are only starting to take up.

More information:

Dhireesha Kudithipudi et al, Design principles for lifelong learning AI accelerators, Nature Electronics (2023). DOI: 10.1038/s41928-023-01054-3

Argonne National Laboratory

Citation: Lifelong learning will power next generation of autonomous devices (2024, January 31), retrieved 2 February 2024 from https://techxplore.com/news/2024-01-lifelong-power-generation-autonomous-devices.html
