Material that listens: Chip-based approach enables speech recognition and more

Speech recognition without heavy software or energy-hungry processors: researchers at the University of Twente, together with IBM Research Europe and Toyota Motor Europe, present a completely new approach. Their chips allow the material itself to “listen.” The publication by Prof. Wilfred van der Wiel and colleagues appears today in Nature.

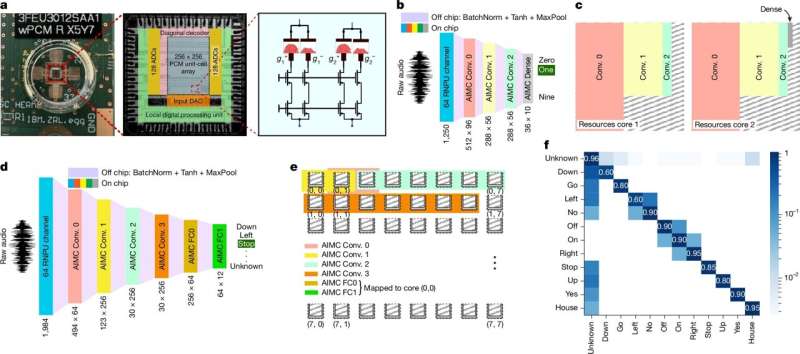

Until now, speech recognition has relied on cloud servers and complex software. The Twente researchers show that it can be done differently. They combined a Reconfigurable Nonlinear Processing Unit (RNPU), developed at the University of Twente, with a new IBM chip. Together, these devices process sound as smoothly and dynamically as the human ear and brain. In tests, this approach proved at least as accurate as the best software models, and in some cases more accurate.

The potential impact is considerable: hearing aids that use almost no energy, voice assistants that no longer send data to the cloud, or cars with direct speech control. “This is a new way of thinking about intelligence in hardware,” says Prof. Van der Wiel. “We show that the material itself can be trained to listen.”

Beyond speech

The technology is not limited to speech. In principle, it can process any time-dependent signal. Video, images, or continuous data streams from sensors are equally suitable. Imagine sensors that constantly measure their environment and can respond autonomously, without needing a new battery every few days or relying heavily on an internet connection. Many computational tasks can be carried out locally and energy-efficiently, making devices smarter and more independent.

The same principle could also be used to accelerate demanding AI tasks. Specific parts of complex algorithms could be embedded directly in materials, relieving the load on conventional chips. This hybrid approach would allow traditional digital circuits to work in tandem with in-materia components that handle certain tasks far more efficiently.

From lab to practice

Van der Wiel hopes the technology will not remain limited to scientific papers. “My dream is that our chips will find their way into real-world applications, such as hearing aids. A component of such a device could then be based on our technology.”

That prospect is realistic because of the materials used. The chips are based on standard silicon and work at room temperature. This makes it feasible to produce them in existing semiconductor factories. “That makes scaling up toward practical applications much more realistic,” Van der Wiel explains.

More information:

Wilfred G. van der Wiel, Analogue speech recognition based on physical computing, Nature (2025). DOI: 10.1038/s41586-025-09501-1. www.nature.com/articles/s41586-025-09501-1

Provided by University of Twente

Citation: Material that listens: Chip-based approach enables speech recognition and more (2025, September 17), retrieved 18 September 2025 from https://techxplore.com/news/2025-09-material-chip-based-approach-enables.html
